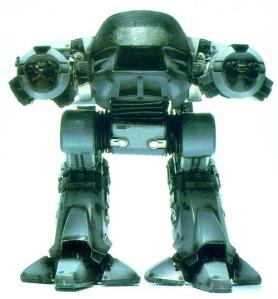

Ethics of Killer Robots

Would you deploy a new military robot, armed to the teeth and more operationally capable than any human soldier, if it were programmed with a detailed set of ethical rules? This is the intriguing question Russell Blackford asks over at the IEET.

These rules would encourage the robot to act within current international laws of war and enable it to complete its objectives with a minimum of collateral damage and loss of civilian life. In fact, by every measurable standard, it performs better than human soldiers:

> The T-1001 is more effective than human soldiers when it comes to traditional combat responsibilities. It does more damage to legitimate military targets, but causes less innocent suffering/loss of life. Because of its superior pattern-recognition abilities, its immunity to psychological stress, and its perfect “understanding” of the terms of engagement required of it, the T-1001 is better than human in its conformity to the rules of war.

It sounds appealing, as would any device that could end wars with a minimum of damage and loss of life. But what if something went wrong?

> One day, however, despite all the precautions I’ve described, something goes wrong and a T-1001 massacres 100 innocent civilians in an isolated village within a Middle Eastern war zone. Who (or what) is responsible for the deaths? Do you need more information to decide? Given the circumstances, was it morally acceptable to deploy the T-1001? Is it acceptable for organisations such as DARPA to develop such a device?

I think there are a lot of ethical issues tied up in this thought experiment. One of the most interesting is the attribution of responsibility. We naturally want to assign responsibility for a moral transgression. Why? Because if an agent commits an impermissible act once, we assume they are more likely to commit another; that is, one moral transgression reduces our trust in that agent and damages their reputation.

But can the robot be morally responsible? Intuitively, no. It would have to be the programmers, or whoever assigned the mission, unless we could reliably demonstrate that the robot somehow qualified as a moral agent. But the programmers and mission assigners are further removed from the massacre, and intuitively we treat individuals further removed from an incident as less morally culpable.

Or perhaps the massacre can be traced to a fault in the programming that led to unanticipated consequences. In that case, moral responsibility is somewhat diluted, because the massacre wasn’t an intentional act but an accident, even if, to those on the ground, a robot stomping or rolling around shooting civilians certainly looks intentional.

My point in all this is that the robot massacre example pushes a lot of intuitive moral buttons, but the moral salience of the case is far harder to judge. The robot is not a moral agent, yet we intuitively judge it as one. Until we can untie this knot and see the robot for what it is, or become comfortable with its diminished moral capacity, we’re going to be conflicted over such situations.

On another level, I wonder whether such a thought experiment even represents a realistic scenario. I’m highly sceptical that a moral code written out as a series of propositions (‘don’t kill or injure civilians’; ‘unless it’s as the unintended consequence of an act that prevents the death or injury of more civilians’; ‘but only when such death or injury of more civilians is reliably predictable’, etc.) can ever equip a robot to make reliable moral judgements. Such a series of moral propositions, I believe, would present a kind of moral frame problem: it would lead either to anomalous results or to paralysis.

My view of morality is that it is heavily emotion- and intuition-laden – often with conflicting and competing emotions and intuitions. And it’s the interplay between these forces plus reason that results in moral behaviour that we would recognise as being human-like. A robot, presumably absent emotion, would make moral judgements in a very different way, if at all.

Then there’s the issue of robots becoming self-aware, which could lead them to value self-preservation. Add the ability to secure their own resources, replicate, and adjust their design from one generation to the next, and we’re in an entirely different ballgame. Then natural selection takes over: those robots that are better able to reproduce will be more successful. And if they then come into competition with human interests, we could be in trouble. It sounds like science fiction, but it’s just ‘natural’. And we’d be ill-advised to forget this prospect when designing our warrior robots.
