Concrete Cognition and the Cogs of the Brain

It’s somewhat unfashionable in polite circles to refer to the brain as a machine. But I reckon that’s precisely what it is. This doesn’t in any way diminish the wonder of the mind or the brain; rather, the notion, once understood, dramatically elevates the wonder we ought to feel for machines.

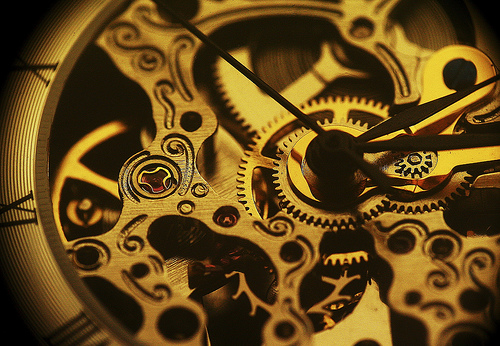

And I use the word “machine” deliberately rather than “computer.” It’s actually both, but the machine comes first. It’s in the properties and interactions of the cogs of the machine that we can ultimately find intelligence, and it’s insufficient to refer only to symbol manipulation or cognitive models. We must see that intelligence is built into the physical properties of the brain, albeit in a particularly clever way, though not fundamentally much cleverer than an abacus.

This approach also sheds light on why I find so distasteful the notion that all knowledge is knowledge-that – i.e. propositional or explicit knowledge that can be captured in propositional form, such as “I know the sky is blue.” I far prefer to start with knowledge-how – concrete knowledge and abilities, and things like “I know how to ride a bike” – as the foundation of knowledge.

Let me explain:

When in London on my recent European jaunt, I was privileged to see the first working example of Charles Babbage’s Difference Engine at the wondrous Science Museum. This famous artefact was a forerunner of today’s computers, but what’s profound about it isn’t just its sophistication; it’s also that it isn’t quite as sophisticated in design as a modern computer. Instead it falls at a glorious midpoint between the abstract and the concrete, one that beautifully illustrates how the brain itself is a machine – a computing machine.

The key is that the Difference Engine is a mechanical calculator: through the manipulation of mechanical parts it is able to produce the correct answer to a particular mathematical problem (within a particular range of problems, namely tabulating the values of polynomial functions).

Crucially, it is able to produce this answer by virtue of the physical properties of the machine itself. If it had different physical properties – say the cogs were of a different diameter or with a different number of teeth – it would produce a different result. As such, in an important sense, the information pertaining to these problems is contained within the mechanical properties of the machine.
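To make the point about cog settings concrete, here is a minimal Python sketch of the method of finite differences, the principle the Difference Engine mechanises. A polynomial of degree n has a constant n-th difference, so once the starting value and its successive differences are set (the analogue of setting the cogs), every further table entry follows from repeated addition alone. The function names and the example polynomial are illustrative assumptions, not a model of Babbage’s actual mechanism.

```python
def initial_differences(f, degree):
    """Return [f(0), Δf(0), Δ²f(0), ..., Δⁿf(0)] for a polynomial f of the given degree."""
    values = [f(x) for x in range(degree + 1)]
    columns = []
    while values:
        columns.append(values[0])
        # Replace the row of values with its row of first differences
        values = [b - a for a, b in zip(values, values[1:])]
    return columns


def difference_engine(columns, steps):
    """Tabulate `steps` successive values using nothing but addition.

    `columns` holds the current value followed by its successive differences;
    these starting settings play the role of the engine's cogs.
    """
    columns = list(columns)  # don't mutate the caller's settings
    table = []
    for _ in range(steps):
        table.append(columns[0])
        # Add each column's right-hand neighbour into it, left to right, so every
        # column uses its neighbour's value from before this step
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table


settings = initial_differences(lambda x: x * x + x + 41, degree=2)
print(settings)                         # [41, 2, 2]
print(difference_engine(settings, 5))   # [41, 43, 47, 53, 61]
```

Changing the starting columns from `[41, 2, 2]` to `[0, 1, 2]` makes the very same adding machinery tabulate x² instead, which is the sense in which the information lives in the configuration rather than in the act of adding.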

A far simpler example might be a piece of string tied at one end to a pin. Swing the string around the pin, keeping it taut, and the free end will describe a circle. In some important sense the information about describing the circle is contained within the mechanical properties of this system.

A better example might be a mechanical model of the solar system, an orrery. Such a device can be used to solve problems about the relative position of various planetary bodies at various times, and it does so purely by virtue of the mechanical properties of the system.

Another spectacular example appears in Tim van Gelder’s wonderful 1995 paper, “What Might Cognition Be, If Not Computation?”, in The Journal of Philosophy. He discusses the Watt centrifugal governor, which effectively performs a complex computation in real time, without abstraction, simply by virtue of the physical properties instantiated in the system.
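For a feel of what the governor does, here is a rough Python sketch. It integrates an idealised equation of motion for the governor’s arm angle θ (measured from the vertical), similar in form to the one van Gelder discusses: θ'' = (nω)² sin θ cos θ − (g/l) sin θ − r θ', where ω is engine speed, n a gearing constant, l the arm length and r a friction coefficient. All parameter values are assumptions chosen for illustration, and stepping the equation in small discrete increments only approximates what the physical device does continuously.

```python
import math


def governor_arm_angle(omega, theta0=0.5, n=1.0, g=9.81, arm_length=0.3,
                       friction=2.0, dt=0.001, duration=20.0):
    """Approximate the arm angle (radians) after `duration` seconds at engine speed `omega` (rad/s).

    Uses semi-implicit Euler steps on the idealised (assumed) equation
    theta'' = (n*omega)**2 * sin(theta) * cos(theta) - (g / l) * sin(theta) - r * theta'.
    """
    theta, velocity = theta0, 0.0
    for _ in range(int(duration / dt)):
        # Centrifugal lift, minus gravity's pull, minus friction
        accel = ((n * omega) ** 2 * math.sin(theta) * math.cos(theta)
                 - (g / arm_length) * math.sin(theta)
                 - friction * velocity)
        velocity += accel * dt   # update velocity first...
        theta += velocity * dt   # ...then the angle, using the new velocity
    return theta


def equilibrium_angle(omega, n=1.0, g=9.81, arm_length=0.3):
    """Angle at which the arms come to rest: cos(theta) = g / (l * (n*omega)**2).

    Only defined when the engine spins fast enough for that ratio to be below 1.
    """
    return math.acos(g / (arm_length * (n * omega) ** 2))


for speed in (10.0, 15.0):
    print(f"omega={speed}: simulated {governor_arm_angle(speed):.4f}, "
          f"equilibrium {equilibrium_angle(speed):.4f}")
```

The arms settle where the centrifugal and gravitational terms balance, so a faster engine lifts them higher; in the real device that arm height is what works the throttle valve, with no separate step of measuring, representing or calculating.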

Yes, one can abstract away from the concrete system and describe the information in a disembodied way, as a formula, an algorithm or a computational process. But that doesn’t diminish the fact that in an important sense there is information contained within certain mechanical systems simply by virtue of their physical properties.

This means these systems can perform computational tasks of greater or lesser sophistication. Clearly the Difference Engine can perform a far wider range of computations than can a piece of string and a pin. But compute they both do.

The problem with concrete systems is that they are relatively static. A governor might be brilliantly subtle at regulating the speed of a steam engine, but it’s dreadful at telling you the position of Saturn relative to Mars. To put it another way, the information embodied in a cog is fixed, and if you want to change it, you need to change the cog, or change the way it interacts with the other components of the concrete system.

One way to increase the complexity and flexibility of a concrete system is to increase the number of cogs. More cogs means more embodied information in the system and more ways of interacting, thus greater complexity. But it doesn’t take long before it becomes infeasible to add more cogs.

Another way is to arrange your finite number of cogs in a way that allows them to interact in different ways. That’s how the Difference Engine differs from the centrifugal governor. Each different configuration of cogs embodies a different bunch of information and/or processes it can execute. Yet the Difference Engine is still limited in the number of configurations it can embody.

What you really want is a concrete system that can be configured in an effectively infinite number of ways – a system that employs recursion. That way it can embody an infinite range of abstract systems, information or processes. That’s basically what the successor to the Difference Engine offered: Babbage’s other great invention, the Analytical Engine, which was designed to be programmed with punched cards.

And that’s basically our brain.

Our brains are not fundamentally different to a mechanical computation system – with neurons instead of cogs embodying information, and the interactions embodying processes in concrete form – except our brains can reconfigure themselves to embody a vast, if not infinite, range of computations.

Our brains are effectively a system of cogs that achieves complexity not only through more cogs (although some 86 billion neurons is a lot of cogs) but through the reconfiguration of those cogs.

These configurations layer new interactions on top of each other until you get to something capable of a startling array of abstract computations – even to the extent of abstractly reflecting on its ability to abstract.

Now, all of these concrete systems, including the brain, can be represented in an abstract form. They all embody some formulae, processes or algorithms (or cognitive models) which can be separated from the system itself and reproduced in a computer. That’s interesting, but it doesn’t mean the concrete system resembles a computer as such. There will also be some information embodied in the concrete system that cannot be represented in a computer: some computations require the specific concrete properties of the system, much as the centrifugal governor computes continuously, in a way that a serial, step-by-step computer cannot.

This embodied knowledge is present in our bodies too. When I say I know how to ride a bike, I’m not just talking about what’s going on in my brain (certainly not just in the higher cognitive bits) but also in my body. To say that knowing how to ride a bike is simply knowledge-that entirely misses the point.

Likewise, to say that all cognition is symbol manipulation misses the point. Cognition might be describable in such terms, but understanding cognition in humans requires us to understand the cogs in the machine and the embodied knowledge they instantiate.

Now, what I’m saying here will probably either not surprise you at all (because I’m a latecomer to van Gelder’s paper), or it’ll make you spit and swear that I’ve missed the point. Either way, fair enough. But be wary of ignoring the concrete, because cogs are smarter than you might think.

4 Comments

Donal Henry · 14th June 2011 at 3:53 am

Your article parallels a talk I watched recently. Your notion that “the information pertaining to these problems is contained within the mechanical properties of the machine” ties in nicely with the speaker’s point that the limits of computation are really the limits of physics. For example, if we could go faster than light, NP problems could be reduced to P (I think I’m saying this correctly).

simbel · 14th June 2011 at 8:58 am

I think your view of the mind is still too brain-centered. Also, the way you use the term «embodied» is not entirely convincing—I would rather use something like «embedded» or «intrinsic to» when speaking of information entailed in the structure of a system. You are, however, spot on regarding abstract vs. concrete systems.

In the embodied cognition paradigm embodiment means that cognitive processes—thoughts, beliefs, feelings, perceptions, the whole lot—are shaped by the way the cognitive agent is built and how it, being an embodied mind, interacts with the environment. The brain is an important part of this picture, yet it is only a part. And I would argue that, in a way, the number of cogs is actually infinite, if we take into account how the brain couples with the environment to allow for cognitive coupling.

Strongly suggested reading is John Haugeland’s «Minds Embodied and Embedded»; it’s even more seminal than van Gelder’s piece. He discusses at length Simon’s Ant, perhaps you remember me mentioning that example. It’s relevant to understanding cognition that does not require mental representation in the traditional cognitivist sense.

simbel · 14th June 2011 at 9:00 am

Two typos. Sorry.

Tim Dean · 14th June 2011 at 11:17 am

Yep, that makes perfect sense. I’ll replace my usage of “embodied” with “intrinsic”. I’ve used the term “intrinsic knowledge” before – don’t know why I didn’t employ it again this time.

Then I think my brain-as-machine-with-intrinsic-knowledge thing will be able to slot into the broader embodied knowledge programme.

And will read the Haugeland piece next!